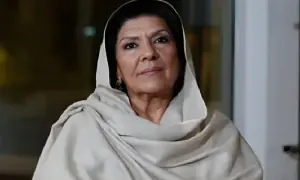

Targeted by deepfake nudes, this teen now helps others stay safe

When Elliston Berry, 14 at the time, discovered a classmate had created and shared a deepfake nude image of her, she didn’t know where to turn for help or how to get the images removed from social media.

Now 16, Berry is working to ensure other teens don’t face the same trauma.

Berry helped develop an online training course to educate students, parents, and school staff about non-consensual, explicit deepfake image abuse.

The initiative was created in partnership with cybersecurity firm Adaptive Security and Pathos Consulting Group.

The programme comes amid a rise in AI-driven sexual harassment, with tools that make creating realistic deepfake images of minors and adults increasingly easy.

Just this week, Elon Musk’s xAI faced criticism after its AI chatbot Grok was repeatedly used to generate sexualised images of women and minors. xAI has since limited the chatbot’s image-generation capabilities.

According to research by the non-profit Thorn, one in eight US teens reports knowing someone who has been targeted by nude deepfakes.

The Take It Down Act, signed into law last year with advocacy from Berry, makes it a crime to share non-consensual explicit images — real or AI-generated — and requires platforms to remove such content within 48 hours of notification.

“One of the situations we ran into was a lack of awareness and a lack of education,” Berry told CNN about the Texas high school where she was harassed.

“They were more confused than we were, so they weren’t able to offer any comfort or protection. That’s why this curriculum is so important … it focuses on educators so they can help and protect victims.”

The 17-minute online course is designed for middle and high school students, teachers, and parents.

It covers how to recognise AI-generated deepfakes and explains deepfake sexual abuse and sextortion. It also includes resources from RAINN and legal guidance under the Take It Down Act.

Sextortion — where victims are coerced into sending explicit images and then blackmailed — has affected thousands of teens and led to multiple suicide deaths.

“It’s not just for potential victims, but also potential perpetrators,” said Adaptive Security CEO Brian Long.

“They need to understand this isn’t a prank. It’s illegal and extremely harmful.”

Berry said it took nine months to remove her own images from social media.

“I know a handful of girls this has happened to just in the past month,” she said.

“It is so scary, especially if no one knows what we’re handling. So it’s super important to educate and have conversations.”

Adaptive Security is making the course available for free to schools and parents.
